Editing La Commedia – Part 2

I’m back from Amsterdam, and we’ve finished editing La Commedia. A few weeks ago I described the basic setup we were working with. The whole thing worked beautifully. We almost never rendered anything. Occasionally, playing back a freeze frame while 2 other video streams were running caused dropped frames, but a quick render on the 8-core Mac Pro we were working on took care of that. The finale uses all 5 screens at once in a seizure-inducing extravaganza that also includes some effects, so it needed rendering as well. But for most of the show I was able to edit up to 4 streams of resized HD video in realtime.

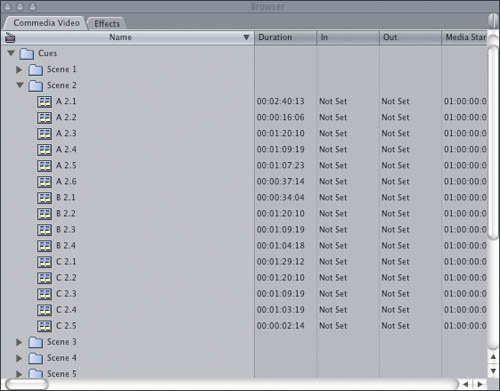

This is an example of the format we used during editing. Each picture represents one of the 5 screens that will be in various locations throughout the theater. The “C” screen is not in use in this example.

The real difficulty in this project was creative. Unlike a traditional opera, this one doesn’t have a clear narrative. It’s more a collection of fragments of ideas that all relate to a theme. Hal Hartley, the director of the show, came up with a separate story for the film that was inspired by the ideas in the opera. But while there’s a clear relationship between the two, the film is definitely not just an illustration of what the singers are singing about on stage. And I usually had no idea what anyone was singing about anyway, since we edited to MIDI recordings of the score.

As we started editing, there were a lot more decisions to make than usual. In a movie you can take it for granted that there will be an image on screen most of the time. You might have a few seconds of black here and there, but in general something is always going on. With a stage production, though, you might not have any video at all for several minutes while the audience focuses on some activity on the stage. And when we do want to show video, we have 5 different screens to choose from. Parts of the audience can’t see some of the screens, and others see certain screens from behind, so the image appears mirrored and we can’t put text on those screens. It was all very tricky.

But we persevered, and in the end we came up with something I’m really proud of. We finished editing after 4 weeks and then started the complicated work of handing the video over to the stage management crew. For each portion of video on each screen, we had to determine at what point in the score the video should start and end, and what should happen if the video ends before the music reaches the out point. Since the music is live, the orchestra will play at slightly different speeds every night, and will generally play slower than the MIDI recording. In most cases we planned to have the video freeze on the last frame and wait until the next cue came along.

Next I broke the video down by screen and cue. Whenever there was a start or stop cue in the music, I created a separate timeline.

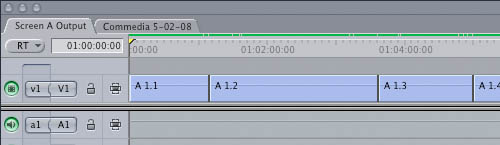

I then nested the timelines by screen, so all of the “A” cues went into one timeline, and so on. I put about a second of black between clips, making sure each one started on a whole second (frame 00) just to make typing in the timecode simpler.

Each of these screen timelines was output to a separate Doremi V1 hard drive recorder via SDI from the Kona 3 card. Screen A used an HD V1, and the video for screens B-E was downconverted to anamorphic PAL SD. Each screen’s timecode started on a different hour to avoid confusion.

Once all the video was on the hard drives, I output EDLs listing the start and stop timecode of each nested clip. Peter, who will handle video playback during the performances, then entered the timecode into the Doremi machines to create separate clips that can be recalled automatically and can either stop when they finish or loop as needed.
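The timecode layout above (about a second of black between clips, each clip starting on frame 00, each screen on its own hour) is simple arithmetic, so here is a minimal Python sketch of it. It assumes 25 fps non-drop-frame timecode for every screen and treats out points as exclusive (the first frame after the clip), as EDLs usually do; the function names, the hour assigned to a screen, and the clip durations are hypothetical and only for illustration.

```python
from dataclasses import dataclass

FPS = 25  # assumption: 25 fps (PAL-rate) material on every screen


def to_timecode(frames: int, fps: int = FPS) -> str:
    """Convert an absolute frame count to non-drop-frame HH:MM:SS:FF."""
    hours, rem = divmod(frames, 3600 * fps)
    minutes, rem = divmod(rem, 60 * fps)
    seconds, ff = divmod(rem, fps)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{ff:02d}"


@dataclass
class ClipEvent:
    cue: int
    start: str  # in point on the screen's master timeline
    end: str    # out point, treated as exclusive (first frame after the clip)


def layout_screen(hour: int, durations: list[int], gap_seconds: int = 1,
                  fps: int = FPS) -> list[ClipEvent]:
    """Lay one screen's cue clips end to end on a single timeline.

    The timeline starts at hour:00:00:00, each clip is followed by at least
    gap_seconds of black, and every clip starts on a whole second (frame 00).
    """
    position = hour * 3600 * fps
    events = []
    for cue, duration in enumerate(durations, start=1):
        start, end = position, position + duration
        events.append(ClipEvent(cue, to_timecode(start, fps), to_timecode(end, fps)))
        # Add the gap of black, then round up to the next whole second.
        next_start = end + gap_seconds * fps
        position = -(-next_start // fps) * fps
    return events


# Hypothetical durations (in frames) for two cues on a screen placed on hour 1.
for event in layout_screen(1, [110, 60]):
    print(event.cue, event.start, event.end)
```

With these made-up durations the first clip runs from 01:00:00:00 to 01:00:04:10, and the second starts at 01:00:06:00 rather than 01:00:05:10, because the start is rounded up to the next whole second. Rounding up means the black gap is sometimes a little longer than a second, which matches the "about a second" in practice while keeping every in point easy to type.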

We then played the MIDI recordings for the stage managers and Peter in a room set up with 5 LCD monitors. The stage managers started to get the hang of where in the music the video starts and stops, and Peter figured out the exact start and stop requirements for each cue, and what comes before and after it. Then Rutger came in to program a Medialon show control system to automate the starting, stopping, looping, and freezing. That way a single press of a button can trigger several things at once. For example, one cue might require starting video on 3 different screens simultaneously, which is not easy for one person to do by hand. In the end it all came down to about 60 cues in the Medialon system.
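To illustrate the idea of one button press driving several screens, here is a toy model in Python. It is not Medialon's programming model or API; the class names, clip names, and cue number are hypothetical. Each cue bundles one action per screen, and each action records what that screen should do if its clip runs out before the next cue arrives (freeze on the last frame, loop, or stop), mirroring the behaviors described above.

```python
from dataclasses import dataclass, field
from enum import Enum


class EndBehavior(Enum):
    """What a screen does if its clip ends before the next cue arrives."""
    FREEZE = "freeze"  # hold the last frame
    LOOP = "loop"      # play the clip again from the top
    STOP = "stop"      # cut to black


@dataclass
class Action:
    screen: str  # "A" to "E"
    clip: str    # hypothetical clip name stored on that screen's recorder
    on_end: EndBehavior = EndBehavior.FREEZE


@dataclass
class Cue:
    number: int
    actions: list[Action] = field(default_factory=list)


class ShowController:
    """Toy stand-in for a show control system: tracks each screen's state."""

    def __init__(self) -> None:
        self.screens: dict[str, Action] = {}

    def go(self, cue: Cue) -> None:
        # A single "go" applies every action in the cue, so starting video
        # on several screens at once takes one button press.
        for action in cue.actions:
            self.screens[action.screen] = action


# Hypothetical cue that starts video on 3 screens at once.
controller = ShowController()
cue_12 = Cue(12, [
    Action("A", "A_cue12"),
    Action("B", "B_cue12", EndBehavior.LOOP),
    Action("E", "E_cue12"),
])
controller.go(cue_12)
```

Defaulting each action to `FREEZE` reflects the plan of holding the last frame when the orchestra runs slower than the recording; a real show control program would also talk to the playback hardware, which this sketch leaves out entirely.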

And then I flew home the next day. There’s a month of rehearsals and then I’m going back to watch the premiere in June.
